To keep up with AI development, leveraging GPUs has become a top priority for businesses. But it also means navigating a whole new level of complexity at the infrastructure level.

Managing and optimizing GPU resources isn’t an easy task. A lack of visibility and overprovisioning in hyperscale cloud environments can lead to inefficient GPU utilization. Add latency from shared resources, unpredictable costs and the challenge of integrating GPU and CPU workloads, and it gets even more complex, not to mention expensive.

There’s a real need for trusted infrastructure partners that can help organizations leverage AI effectively and achieve the highest possible performance and efficiency for their workloads.

This is why we’ve launched AI Compute (AIC), our bare metal GPU offering that combines industry-leading consultation with cost-optimized, bespoke compute. The result? Maximum performance and cost efficiency, reduced operational complexity and low latency, all underpinned by ongoing expert support from servers.com.

AI Compute is bare metal, dedicated GPU hosting that is custom-built to your needs. Because compute resources are never shared, AIC eliminates the risk of resource contention. And as a custom solution offering full hardware control, AIC provides the reliable, high-capacity compute power, memory and storage needed for high-intensity AI workloads.

Our AIC service is built on the same dedicated bare metal hardware that we use for our Enterprise Bare Metal (EBM) service. Just like with EBM, businesses using AIC rent and maintain sole tenancy over entire physical servers that can be customized to specific business and performance criteria.

But while EBM provides powerful baseline CPU-led compute, AIC adds GPU configurations to run compute-heavy AI workloads.

AIC architectures are also tailored to specific machine learning use cases, training pipelines and model sizes, with optimized CPU, memory and storage configurations. These configurations support all major machine learning frameworks, including PyTorch, TensorFlow and ONNX. Most significantly, our AIC service lets you select and use any GPU model available on the global market.

This makes AIC ideal for a variety of use cases. For example, AIC is perfectly suited to high-throughput training that relies on parallel processing and large datasets, as well as distributed training workloads like large language model (LLM) training.

Beyond training models, AIC also supports vector databases like Weaviate and Qdrant that are essential for building and deploying AI-powered applications.

For most organizations, it’s necessary to run a combination of CPU and GPU processors – not just one or the other. For example, you may need CPUs for general purpose applications and the parallel processing capabilities of GPUs to support concurrent high-performance computing.

If you’re managing these workflows across separate network architectures, this can create additional operational complexity.

This is where utilizing AIC as part of our hybrid bare metal cloud solution is beneficial. By adopting a hybrid bare metal cloud approach, you can mix predictable CPU workloads hosted on EBM, “burst” workloads on Scalable Bare Metal (SBM), and GPU-heavy workloads on AIC, all via a single private network.

Hosting everything on one private network also offers several benefits, including enhanced security, more control over your infrastructure, lower latency and reduced data transfer costs.

GPUs can be deployed as bare metal GPUs or virtualized GPUs, and choosing the right type of deployment for your specific business needs is important.

For example, if you’re working with highly latency-sensitive workflows, it’s worth considering a dedicated bare metal GPU over a virtualized GPU to avoid performance bottlenecks. However, if you’re looking to spin up a GPU for some temporary testing, then you may well want the virtualized option, as it lets you spin compute up and down almost instantly.

Virtualization takes place in shared cloud environments, such as hyperscale cloud services. A hypervisor layer sits between the physical hardware and the operating system, creating multiple isolated environments called virtual machines (VMs). These VMs share the underlying hardware resources, including the GPUs. Because they are quick to spin up, virtualized GPUs are ideal for short-term use cases, like testing out a new LLM.

In non-virtualized environments like AIC, the entire physical server and the entire GPU is dedicated to a single user. There is no hypervisor layer between the physical hardware and the operating system (OS), and the application installed on the server has direct access to the GPU resources. With no sharing of resources or ‘noisy neighbors’ to contend with, non-virtualized environments provide more stable and consistent performance. And, by offering full resource isolation, they’re also the best choice for security-sensitive workloads.
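One practical way to see this difference is to check, from inside the OS, whether a hypervisor is present. The sketch below is a minimal illustration, assuming an x86 Linux host: the kernel reports the CPUID "hypervisor present" bit as a `hypervisor` flag in `/proc/cpuinfo`, which is normally set inside a VM and absent on bare metal. The function name is hypothetical, and some hypervisors can hide this bit, so treat the result as a hint rather than proof.

```python
import os


def running_under_hypervisor(cpuinfo_text: str) -> bool:
    """Return True if the first 'flags' line in /proc/cpuinfo lists 'hypervisor'.

    Assumes x86 Linux, where every CPU entry carries the same 'flags' line.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # Flags are space-separated tokens; match the whole token only.
            return "hypervisor" in value.split()
    # No 'flags' line found (e.g. non-x86 CPU): no evidence of a hypervisor.
    return False


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        virtualized = running_under_hypervisor(f.read())
    print("hypervisor detected (virtualized)" if virtualized
          else "no hypervisor flag (likely bare metal)")
```

Parsing the text rather than reading the file inside the function keeps the check easy to test against sample `/proc/cpuinfo` contents.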

There are advantages to bare metal GPU servers and virtualized GPUs. Choosing between them comes down to your specific requirements.

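| | Bare metal GPU | Virtualized GPU |
|---|---|---|
| Advantages | Dedicated hardware with no resource contention; stable, consistent performance; full resource isolation; full hardware control and customization | Quick to spin up and down; pay-as-you-go pricing |
| Disadvantages | Not built for short-term testing or rapidly spinning compute up and down | Shared resources can cause contention and patchy performance from ‘noisy neighbors’; less control over the underlying hardware |
| Best for | Persistent, latency-sensitive and security-sensitive workloads on long-term agreements | Short-term use cases, such as testing out a new LLM |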

There are two key scenarios when we would recommend choosing AIC. The first is when you want to optimize performance and the second is when you want to optimize cost.

Latency from storage and networking bottlenecks, and performance degradation from resource contention, can be a challenge in shared cloud environments, because vendors sometimes overprovision finite resources. As a result, applications running on a VM with a virtualized GPU are more likely to experience patchy performance than applications on equivalent bare metal GPU servers like AIC.

Because AIC runs on dedicated bare metal, resources are fully isolated and resource contention is therefore eliminated. There’s also greater scope for additional performance optimization through hardware customization.

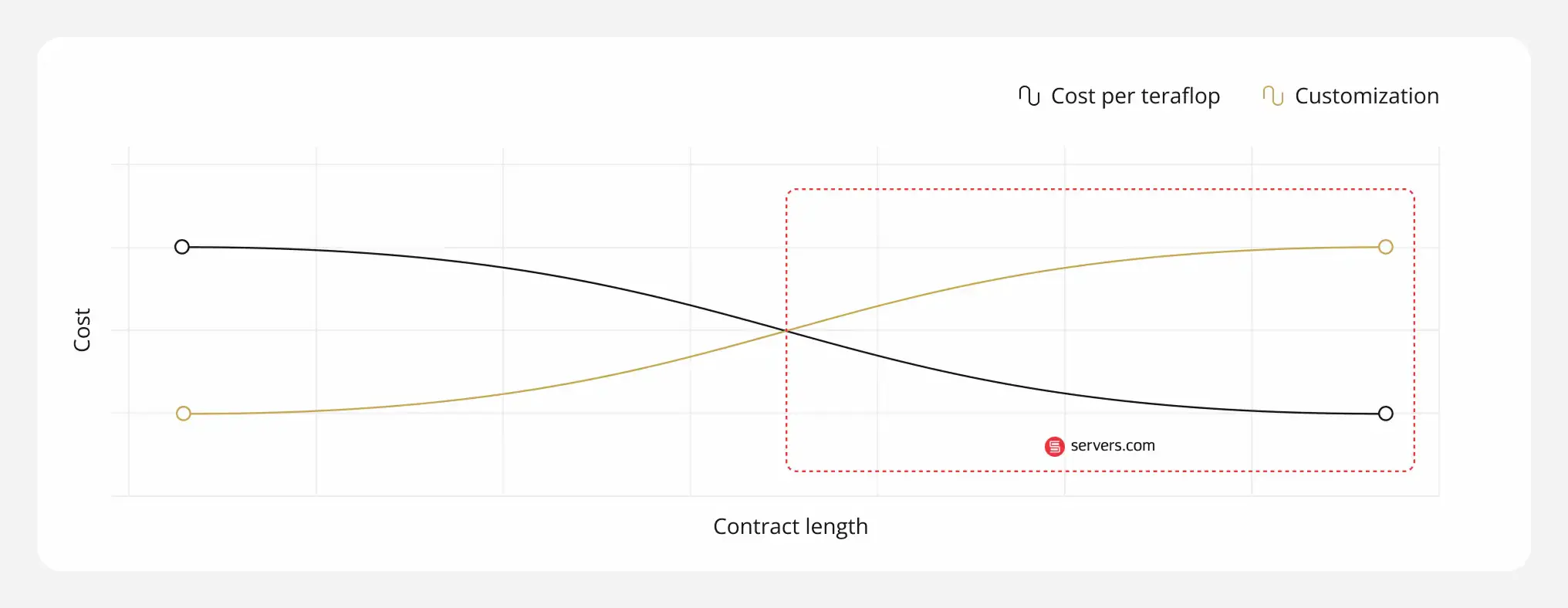

Additionally, having fewer wasted resources and the opportunity to customize your hardware at a granular level also helps drive down overall infrastructure spend. And because AIC is specifically designed for persistent workloads on long-term agreements, there’s no on-demand premium priced in. So it’s a more cost-effective source of GPU compute all around.

However, whilst AIC is ideal for supporting ongoing testing and iteration, it’s not built for short-term testing. If, for instance, you need to quickly spin GPUs up and down because you’re testing out a new LLM, then AIC won’t be the best solution.

Such cases are better suited to virtualized environments (like those from hyperscale cloud providers), where you can deploy fast and spin compute up and down on a pay-as-you-go basis. Then when your needs stabilize and you’re ready to optimize your GPU usage, migrating over to a solution like AIC will offer long-term performance and business benefits.
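The cost trade-off above can be framed as a simple break-even calculation: pay-as-you-go compute costs an hourly rate multiplied by the hours you actually use, while a long-term agreement costs a flat monthly amount regardless of usage. The sketch below assumes exactly that pricing model; the class and function names and the example rates are hypothetical and are not actual servers.com or hyperscaler prices.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


@dataclass
class GpuPricing:
    on_demand_per_hour: float   # hypothetical pay-as-you-go rate for one GPU
    committed_per_month: float  # hypothetical flat monthly rate on a long-term agreement


def break_even_hours(p: GpuPricing) -> float:
    """Monthly GPU-hours above which the committed plan becomes cheaper."""
    return p.committed_per_month / p.on_demand_per_hour


def break_even_utilization(p: GpuPricing) -> float:
    """Break-even point as a fraction of a full month of GPU time."""
    return break_even_hours(p) / HOURS_PER_MONTH


def cheaper_option(p: GpuPricing, hours_used: float) -> str:
    """Compare monthly costs; a tie goes to on-demand (no commitment needed)."""
    on_demand_cost = p.on_demand_per_hour * hours_used
    return "committed" if p.committed_per_month < on_demand_cost else "on-demand"


if __name__ == "__main__":
    pricing = GpuPricing(on_demand_per_hour=4.00, committed_per_month=1800.0)
    print(break_even_hours(pricing))                # 450.0
    print(f"{break_even_utilization(pricing):.0%}")  # 62%
    print(cheaper_option(pricing, hours_used=100))   # on-demand
    print(cheaper_option(pricing, hours_used=700))   # committed
```

With these example rates, the break-even point is 450 GPU-hours a month, or roughly 62% utilization. Steady workloads above that level favor a committed plan, while short, bursty testing favors on-demand compute. Real pricing often adds storage, egress and support costs, which this sketch ignores.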

In addition to performance and cost optimization, AIC brings further benefits, including:

- **Full hardware control:** With AIC you maintain direct control over your underlying server hardware. You can manage your servers remotely via the integrated Dell Remote Access Controller (iDRAC), configure RAID arrays and format disks according to your requirements.
- **Expert support:** Our AIC service is underpinned by expert support. Whether you’re deploying machine learning models or hosting vector databases, you’ll always have experienced engineers on hand to consult on AI stack compatibility.
- **Redundant networking:** AIC is supported by an enterprise-grade, redundant network architecture suited to distributed training, clustered inference and large-scale AI pipelines, and designed to keep latency low and availability high.
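As a sketch of the remote management described above: iDRAC exposes the DMTF Redfish REST API, so basic server state can be read programmatically as well as through the web console. The example below assumes Redfish is enabled on your iDRAC and that you have credentials for it. The host name, credentials and helper function names are placeholders, while `System.Embedded.1` is the resource ID iDRAC uses for the host system. It uses only the Python standard library.

```python
import base64
import json
import ssl
import urllib.request

# Redfish ComputerSystem resource for the host managed by this iDRAC.
SYSTEM_PATH = "/redfish/v1/Systems/System.Embedded.1"


def fetch_system(host: str, user: str, password: str, verify_tls: bool = True) -> dict:
    """GET the Redfish ComputerSystem resource using HTTP Basic auth (hypothetical helper)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"https://{host}{SYSTEM_PATH}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    ctx = ssl.create_default_context()
    if not verify_tls:
        # iDRACs often ship with self-signed certificates; only skip
        # verification on a trusted management network.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=30) as resp:
        return json.load(resp)


def summarize_system(resource: dict) -> dict:
    """Pick a few standard Redfish ComputerSystem properties; missing ones become None."""
    return {
        "model": resource.get("Model"),
        "power_state": resource.get("PowerState"),
        "health": resource.get("Status", {}).get("Health"),
        "memory_gib": resource.get("MemorySummary", {}).get("TotalSystemMemoryGiB"),
        "cpu_count": resource.get("ProcessorSummary", {}).get("Count"),
    }
```

For example, `summarize_system(fetch_system("idrac.example.internal", "admin", "<password>"))` would return a small dict with the model, power state, health, memory and CPU count. Keeping the network call separate from the parsing makes the summary logic easy to test without a live iDRAC.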

AI development demands serious compute power. But for many businesses, the cost of that compute power remains a significant barrier to wider innovation.

With highly optimized bare metal GPU deployments, AIC is changing this. Ideal for AI-forward organizations with established machine learning workflows, AIC provides access to high-performance GPUs that won’t damage your bottom line.

Frances is proficient in taking complex information and turning it into engaging, digestible content that readers can enjoy. Whether it's a detailed report or a point-of-view piece, she loves using language to inform, entertain and provide value to readers.